We are glad to be your full-time computer company in Erie, Colorado. We were based in Erie from 2003 to 2015 and are now close by in Longmont, CO, still servicing Erie regularly. Call us for an appointment in Erie, Colorado. We provide computer repair, upgrades, sales, installations, troubleshooting, networking, internet help, virus removal, and training.


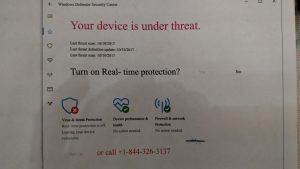

Microsoft SCAM Solved

I went to fix a computer for a customer in Erie, Colorado who was scammed by someone who took over their computer through remote access, claiming to be from Microsoft.

I traced the steps, and what they did was very interesting. They used the command prompt to enter fake commands saying that hackers were infiltrating the system and that the customer needed to pay money to fix the issue. They said they were from Microsoft and needed to fix the problems created by the hackers.

There are no hackers. The scammers put fake messages in places where you would check the system for errors. Here is a printout of the Windows command prompt with the bogus information.

People who are not technicians are fooled by this. But this is a command prompt, not an error screen. The scammers copied and pasted bogus error text into the command prompt, where you are only supposed to type commands, which is why Windows says it is an unrecognized command. People who don't know about computers get confused by this.

The scammers said that the customer must install Microsoft services at $1.54 apiece, 198 times for each service. Then they take the credit card information and charge the card for that and God knows what else. They also worked very fast, having the customer do things on the computer as a distraction and opening a lot of pop-up screens, all while taking over the computer with remote access.

I was able to undo the damage they caused and get the computer back up and running like before. So in the end I fixed the issue. But people need to call Computer Physicians when they have a problem with their computer so that they don't cause more issues. This hacker could have done worse if the customer had not called Longmont Computer Physicians to come solve the issue.


Computer Networks in Longmont Denver Erie Colorado Computer Physicians

Networking is one of the jobs that Longmont Computer Physicians, LLC does to help its clients. Sometimes it is a wireless network; other times the client wants a wired computer network.

A few months ago I needed to hardwire an entire house with CAT5e cabling for a client, for internet and file sharing access. It was a great success! Eight rooms in the house now have access to a network cable for computers.

Here are some pictures of the job, showing the patch cables and routers running into the house and through the walls.

PC Computer Networking in Longmont, Boulder, Denver, Erie Colorado


Computer Repair Windows update in Longmont, Boulder, CO

Our Longmont Computer Physicians, LLC office computer had an interesting issue recently that I thought I would share:

After a Windows 10 update (KB4074588) installed automatically on Valentine's Day, Feb 14, 2018, the USB keyboard on my desktop computer would no longer work. I tried three different USB keyboards; none worked. So I went into Device Manager to uninstall, reinstall, and update the keyboard drivers. That did not work. Then I uninstalled the Windows update. This fixed the problem, but the update would try to install again the next time I rebooted. So I set Windows Update (in System Properties) to never install hardware drivers during updates. From now on I will need to choose manually which driver updates I want.

Computer Physicians provides PC computer networking, repair, Data Recovery, training and virus removal in Longmont, Boulder, Denver, Erie Colorado and the Colorado Front Range


Boulder/Longmont Computer Repair – PC with no hard drive used

Longmont Colorado PC Computer not using its hard drive:

Computer Physicians, LLC just worked on an unusual situation with a Zotac mini PC in Longmont, CO whose Windows boot drive had filled up. I thought this would be good to share with my readers:

This very small Zotac mini PC, running Windows 10 Home with 4GB of RAM, was booting from a 64GB memory chip located on the motherboard and was not using the 300GB internal SATA hard drive. Because the Windows OS was on the small 64GB memory chip, it quickly filled to capacity. The internal hard drive was not being used except to store a few small files. I backed up the customer's data to an external hard drive. I could not clone the 64GB memory chip, but I was able to transfer the OS to the internal hard drive using special disk software. I then needed to go into the BIOS and set the boot drive to the internal drive. The computer runs slower now, since Windows no longer runs from the 64GB memory chip, and the computer itself is an inexpensive, underpowered machine that was designed to run from that chip. The problem with this design is that the 64GB memory chip quickly fills to capacity. (Windows 10 uses a lot of drive space; most systems have 1000GB or more.)
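For readers who want to check how full a boot drive is before it becomes a problem like this one, here is a minimal Python sketch. It is only an illustration, not the tool used on this job: the function names `percent_used` and `is_nearly_full`, the 90% threshold, and the example path `C:\` are assumptions made for this example.

```python
import shutil


def percent_used(path: str) -> float:
    """Return how full the drive holding `path` is, as a percentage."""
    usage = shutil.disk_usage(path)  # named tuple: total, used, free (bytes)
    if usage.total == 0:
        return 0.0
    return 100.0 * usage.used / usage.total


def is_nearly_full(path: str, threshold: float = 90.0) -> bool:
    """True when the drive is at or above the (assumed) warning threshold."""
    return percent_used(path) >= threshold


if __name__ == "__main__":
    # "C:\\" would be the usual Windows boot drive; "." checks the current drive.
    drive = "."
    print(f"{drive} is {percent_used(drive):.1f}% full")
    if is_nearly_full(drive):
        print("Warning: this drive is almost out of space.")
```

On a machine like the one described above, pointing this at the boot drive would have shown the 64GB chip close to 100% while the larger internal drive sat nearly empty.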

I do not like this design and would not recommend this Zotac computer to a client.

The computer would run faster if the hard drive were replaced with a solid state drive, the OS transferred to it, and more RAM installed.

These are some of the situations that Computer Physicians, LLC runs into.

-Steve


Longmont’s Computer Physicians Computer Service and Repair in Longmont Colorado

Computer Physicians, LLC is a computer service company in Longmont, CO in business since 1999.

We provide computer repair and other services onsite at your location for same day service or in our workshop for the lowest cost in the area.

We also provide computer training, tutoring, help, upgrades, computer systems, rentals, sales, troubleshooting, performance improvement, cyber security, virus removal, networking, website development and hosting, internet setup, and router and switch installation. We can also use the 1 Gbps upload and download internet connection at our office for any fast internet needs you have. We are experts at data recovery of lost data and PC system crash recovery. We also develop, program and create Song Director and NameBase database software.

Computer Physicians services the entire Colorado Front Range. Our main technician and president is a CompTIA A+, MCP, and MTA Microsoft certified professional with many college degrees in computers.

Call us today for any of your computer needs.


Longmont’s Newest Computer Viruses – Longmont/Boulder CO – Computer Physicians

Computer Repair Longmont, CO Virus removal. – Computer Physicians, LLC

Here is some news about the latest computer viruses out today that Computer Physicians in Longmont/Boulder, CO can help you with:

Technewsworld:

A new ransomware exploit dubbed “Petya” struck major companies and infrastructure sites in late June 2017, following the previous month’s WannaCry ransomware attack, which wreaked havoc on more than 300,000 computers across the globe. Petya is believed to be linked to the same set of hacking tools as WannaCry.

Petya already has taken thousands of computers hostage, impacting companies and installations ranging from Ukraine to the U.S. to India. It has impacted a Ukrainian international airport, and multinational shipping, legal and advertising firms. It has led to the shutdown of radiation monitoring systems at the Chernobyl nuclear facility.


Trends in PC technology – Computer Physicians Longmont/Boulder/Erie, CO

Here is a good article which talks about the changes in PC technology and the trends.


Longmont Boulder Computer Repair Data Recovery -Video

Longmont Boulder Computer Repair Data Recovery PC service Virus removal.

https://www.computer-physicians.com/ in Longmont, Boulder, Erie, Denver, Colorado. Onsite at your location – we come to you! Onsite, in-shop or remote help. Video about Computer Physicians:



Boulder/Longmont Computer Repair – History of the Computer – Computer Physicians, LLC


Computer Physicians provides data recovery, computer troubleshooting, virus removal, networking and other computer fixes.

Here is a good article about the history of computers by marygrove.edu

History of the Computer

The history of the computer can be divided into six generations, each of which was marked by critical conceptual advances.

The Mechanical Era (1623-1945)

The idea of using machines to solve mathematical problems can be traced at least as far back as the early 17th century, to mathematicians who designed and implemented calculators that were capable of addition, subtraction, multiplication, and division. Among the earliest of these was Gottfried Wilhelm Leibniz (1646-1716), German philosopher and co-founder (with Newton) of the calculus. Leibniz proposed the idea that mechanical calculators (as opposed to humans doing arithmetic) would function fastest and most accurately using a base-two, that is, binary system.

Leibniz actually built a digital calculator and presented it to the scientific authorities in Paris and London in 1673. His other great contribution to the development of the modern computer was the insight that any proposition that could be expressed logically could also be expressed as a calculation, “a general method by which all the truths of the reason would be reduced to a kind of calculation” (Goldstine 1972). Inherent in the argument is the principle that binary arithmetic and logic were in some sense indistinguishable: zeroes and ones could as well be made to represent positive and negative or true and false. In modern times this would result in the understanding that computers were at the same time calculators and logic machines.

The first multi-purpose, i.e. programmable, computing device was probably Charles Babbage’s Difference Engine, which was begun in 1823 but never completed. A more ambitious machine was the Analytical Engine. It was designed in 1842, but unfortunately it also was only partially completed by Babbage.

That the modern computer was actually capable of doing something other than numerical calculations is probably to the credit of George Boole (1815-1864), to whom Babbage, and his successors, were in deep debt. By showing that formal logic could be reduced to an equation whose results could only be zero or one, he made it possible for binary calculators to function as logic machines (Goldstine 1972).
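As a rough illustration of the point about Boole, the Python sketch below adds two numbers using nothing but true/false logic on their bits. It is not from the article; the function names `half_adder`, `full_adder`, and `add_binary` and the 8-bit width are assumptions chosen for this example.

```python
from typing import Tuple


def half_adder(a: bool, b: bool) -> Tuple[bool, bool]:
    """Add two one-bit values using only logic. Returns (sum, carry)."""
    total = a != b   # exclusive OR: true when exactly one input is true
    carry = a and b  # AND: true only when both inputs are true
    return total, carry


def full_adder(a: bool, b: bool, carry_in: bool) -> Tuple[bool, bool]:
    """Add two bits plus an incoming carry by chaining two half adders."""
    s1, c1 = half_adder(a, b)
    s2, c2 = half_adder(s1, carry_in)
    return s2, c1 or c2


def add_binary(x: int, y: int, width: int = 8) -> int:
    """Add two non-negative integers bit by bit; overflow past `width` is dropped."""
    result = 0
    carry = False
    for i in range(width):
        bit_x = bool((x >> i) & 1)
        bit_y = bool((y >> i) & 1)
        s, carry = full_adder(bit_x, bit_y, carry)
        if s:
            result |= 1 << i
    return result


if __name__ == "__main__":
    print(add_binary(5, 3))  # arithmetic carried out entirely with logic
```

Every step of the addition is a logical test on true and false values, which is the sense in which a binary calculator and a logic machine are the same device.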

First Generation Electronic Computers (1937–1953)

Three machines have been promoted at various times as the first electronic computers. These machines used electronic switches, in the form of vacuum tubes, instead of electromechanical relays. Electronic components had one major benefit: they could “open” and “close” about 1,000 times faster than mechanical switches.

A second early electronic machine was Colossus, built for the British military in 1943 by a team led by engineer Tommy Flowers. This machine played an important role in breaking codes used by the German army in World War II. Alan Turing, who also worked on British wartime codebreaking, made his main contribution to the field of computer science with the idea of the “Turing machine,” a mathematical formalism, indebted to George Boole, concerning computable functions.

The machine could be envisioned as a binary calculator with a read/write head inscribing the equivalent of zeroes and ones on a movable and indefinitely long tape. The Turing machine held the far-reaching promise that any problem that could be calculated could be calculated with such an “automaton,” and, picking up from Leibniz, that any proposition that could be expressed logically could, likewise, be expressed by such an “automaton.”
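The read/write head and tape described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not anything from the article: the function name `run_turing_machine`, the rule table `INCREMENT_RULES`, the state names, and the `_` blank symbol are all invented for this example. The rule table makes the machine add one to a binary number written on the tape.

```python
def run_turing_machine(tape, rules, state="start", blank="_", max_steps=1000):
    """Run a one-tape machine until it reaches the 'halt' state.

    `rules` maps (state, symbol) to (symbol_to_write, move, next_state),
    where move is "L" or "R". The tape is unbounded in both directions.
    """
    cells = dict(enumerate(tape))  # position -> symbol
    head = 0
    for _ in range(max_steps):
        if state == "halt":
            break
        symbol = cells.get(head, blank)
        write, move, state = rules[(state, symbol)]
        cells[head] = write
        head += 1 if move == "R" else -1
    else:
        raise RuntimeError("machine did not halt within max_steps")
    if not cells:
        return ""
    span = range(min(cells), max(cells) + 1)
    return "".join(cells.get(i, blank) for i in span).strip(blank)


# Hypothetical rule table: walk right to the end of the number,
# then walk left turning 1s into 0s until a 0 (or blank) can become 1.
INCREMENT_RULES = {
    ("start", "0"): ("0", "R", "start"),
    ("start", "1"): ("1", "R", "start"),
    ("start", "_"): ("_", "L", "carry"),
    ("carry", "1"): ("0", "L", "carry"),
    ("carry", "0"): ("1", "L", "halt"),
    ("carry", "_"): ("1", "L", "halt"),
}

if __name__ == "__main__":
    print(run_turing_machine("1011", INCREMENT_RULES))
```

The machine itself knows nothing about numbers. All of its behavior comes from the rule table, which is why the same simple device can in principle carry out any calculation that can be written as such a table.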

The first general purpose programmable electronic computer was the Electronic Numerical Integrator and Computer (ENIAC), built by J. Presper Eckert and John W. Mauchly at the University of Pennsylvania. The machine wasn’t completed until 1945, but then it was used extensively for calculations during the design of the hydrogen bomb.

The successor of the ENIAC, the EDVAC project, was significant as an example of the power of interdisciplinary projects that characterize modern computational science. By recognizing that functions, in the form of a sequence of instructions for a computer, can be encoded as numbers, the EDVAC group knew the instructions could be stored in the computer’s memory along with numerical data (a “von Neumann Machine”).

The notion of using numbers to represent functions was a key step used by Gödel in his incompleteness theorem in 1931, work with which von Neumann, as a logician, was quite familiar. Von Neumann’s own role in the development of the modern digital computer is profound and complex, having as much to do with brilliant administrative leadership as with his foundational insight that the instructions for dealing with data, that is, programming, and the data themselves, were both expressible in binary terms to the computer, and in that sense indistinguishable one from the other. It is that insight which laid the basis for the “von Neumann machine,” which remains the principal architecture for most actual computers manufactured today.

Second Generation Computers (1954–1962)

The second generation saw several important developments at all levels of computer system design, from the technology used to build the basic circuits to the programming languages used to write scientific applications.

Memory technology was based on magnetic cores which could be accessed in random order, as opposed to mercury delay lines, in which data was stored as an acoustic wave that passed sequentially through the medium and could be accessed only when the data moved by the I/O interface.

During this second generation many high level programming languages were introduced, including FORTRAN (1956), ALGOL (1958), and COBOL (1959).

Important commercial machines of this era include the IBM 704 and its successors, the 709 and 7094. The latter introduced I/O processors for better throughput between I/O devices and main memory.

Third Generation Computers (1963–1972)

The third generation brought huge gains in computational power. Innovations in this era include the use of integrated circuits, or ICs (semiconductor devices with several transistors built into one physical component), semiconductor memories starting to be used instead of magnetic cores, microprogramming as a technique for efficiently designing complex processors, the coming of age of pipelining and other forms of parallel processing, and the introduction of operating systems and time-sharing.

Fourth Generation Computers (1972–1984)

The next generation of computer systems saw the use of large scale integration (LSI, 1000 devices per chip) and very large scale integration (VLSI, 100,000 devices per chip) in the construction of computing elements. At this scale entire processors will fit onto a single chip, and for simple systems the entire computer (processor, main memory, and I/O controllers) can fit on one chip. Gate delays dropped to about 1ns per gate.

Two important events marked the early part of the third generation: the development of the C programming language and the UNIX operating system, both at Bell Labs. In 1972, Dennis Ritchie, seeking to meet the design goals of CPL and generalize Thompson’s B, developed the C language.

Fifth Generation Computers (1984–1990)

The development of the next generation of computer systems is characterized mainly by the acceptance of parallel processing. The fifth generation saw the introduction of machines with hundreds of processors that could all be working on different parts of a single program. The scale of integration in semiconductors continued at an incredible pace (by 1990 it was possible to build chips with a million components), and semiconductor memories became standard on all computers.

Sixth Generation Computers (1990–)

Many of the developments in computer systems since 1990 reflect gradual improvements over established systems, and thus it is hard to claim they represent a transition to a new “generation”, but other developments will prove to be significant changes.

One of the most dramatic changes in the sixth generation will be the explosive growth of wide area networking. Network bandwidth has expanded tremendously in the last few years and will continue to improve for the next several years.